Data Center OS

In late 1999, Marc Andreessen, co-founder of Netscape and of Loudcloud (later Opsware), addressed a handful of engineers huddled around a large table. The table stood apart from the discarded computer boxes and from the jumbled, twisted wires connected to the rack of blinking servers that hosted our initial website. He declared:

We want to build the data operating system for the data center.

He further decreed: “We want to manage, configure, and monitor all systems in our data center.”

At the time, the acronym IaaS was unknown, and provisioning tools such as Puppet, Chef, and Ansible had yet to emerge from the primordial soup. A handful of us built his vision as our version of IaaS (The Way, The Truth, The Cog, The Nursery, and MyLoudcloud). We offered it to the dot-coms of that era as a managed service: we provisioned, managed, and monitored their entire stack of web servers, app servers, databases, and storage.

What Was Missing?

Marc’s vision was prescient. What he didn’t mention was managing and orchestrating all of the data center’s collective compute, disk, and network resources. What he didn’t include was offering the nodes’ combined resources as a single entity, with no general distinctions, no partial partitioning, and no labeling. That is, treating all the servers as “herds of cattle,” not as distinct “named cats.”

Data Center As a Single Computer

Today I see that latter vision realized in Mesosphere’s DCOS, built on Apache Mesos: a data center OS that treats the entire data center as a single computer. Benjamin Hindman, co-creator of Mesos and co-founder of Mesosphere, makes a persuasive case through a set of resonant analogies:

- Mesos is to the cluster what the UNIX kernel is to a machine.
- Its companion frameworks, Marathon and Chronos, play the roles of UNIX’s init.d and cron, respectively.
- Its container isolation and protection correspond to UNIX’s user space.
- Its resource allocation corresponds to the kernel’s scheduling.

Taken together, the cluster behaves as though all its nodes were coalesced into a single computer. David Greenberg makes a similar case in his talk about the evolution of Mesos.
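The following toy Java sketch makes the single-computer analogy concrete. It is not Mesos code: the `Agent` and `ClusterResources` records are hypothetical, and real Mesos tracks far more than CPU, memory, and disk. Each node advertises what it has. The cluster is then treated as one pool holding the sum of those resources, with no node singled out by name.

```java
import java.util.List;

// Hypothetical model, not Mesos code: each agent (slave) node advertises its resources.
record Agent(String host, double cpus, double memMb, double diskMb) {}

// The cluster seen as one logical computer: the sum of all agents' resources.
record ClusterResources(double cpus, double memMb, double diskMb) {

    static ClusterResources coalesce(List<Agent> agents) {
        double cpus = 0, mem = 0, disk = 0;
        for (Agent a : agents) { // every node is treated alike: cattle, not named cats
            cpus += a.cpus();
            mem += a.memMb();
            disk += a.diskMb();
        }
        return new ClusterResources(cpus, mem, disk);
    }

    public static void main(String[] args) {
        List<Agent> agents = List.of(
                new Agent("node-1", 4, 8192, 100_000),
                new Agent("node-2", 8, 16384, 200_000),
                new Agent("node-3", 2, 4096, 50_000));
        // Prints ClusterResources[cpus=14.0, memMb=28672.0, diskMb=350000.0]
        System.out.println(coalesce(agents));
    }
}
```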

What’s more, as a developer who has also worked in the Ops world, I find the most attractive feature to be how easily you can build distributed applications on Mesos. You can also deploy and dynamically scale them with little effort, in either of two ways:

- Request the resources yourself, by writing a Mesos framework scheduler.
- Delegate deployment to Marathon’s REST API with a JSON description, for instance by packaging your app into a Docker container.

To me, that idea approaches what Peter Thiel calls Zero to One innovation. What clearly sets such innovation apart is that the brilliance of any software, framework, or language platform lies in two things. The first is its simplicity and clarity through abstraction. The second is a unified SDK in languages endearing to developers.

Consider UNIX, which provided a system API to its developers. Take the UNIX kernel, which offered an SDK for a myriad of device-driver plugins. Look at Apache Spark, which has a single, expressive API and SDK for processing data at scale. And review Java and J2EE, and what was born out of them. An SDK, like a programming language, has to have a “feel to it,” as James Gosling famously wrote almost two decades ago.

Does Mesos Have a “Feel to It”?

I think so. Let’s explore that with an example. Most of you have, at some point while learning a new language, dabbled with the proverbial “Hello World!” example. In C, you wrote printf("%s", "Hello World\n"); in Java, System.out.println("Hello World!"); and in Python, print "Hello World!".

In today’s complex, distributed world of computing, writing code whose chunks are shipped to nodes in a cluster to perform a well-defined task is not easy. Writing the distributed equivalent of Hello World takes time, some knowledge, and the confidence to overcome the daunting task of distributed programming. Typical examples are Word Count, a distributed command executor, or a crawler.

But some frameworks make writing distributed apps feel inherently easy. Hadoop’s MapReduce, for instance, is not one of them, though it has its purpose and role and does a phenomenal job at large-scale batch processing. Apache Spark has an easy feel to it, and so does Apache Mesos: their APIs and SDKs make it so.

Mesos’ Hello World!

Suppose I wanted to write a simple distributed app that executes a predefined or specified command on my cluster. I could iterate over all the hosts using ssh, or I could create a crontab entry on every host.

But neither scheme scales when I have hundreds of nodes and some of them are out of circulation due to upgrades.

Mesos offers three alternatives, and in each one the chosen framework handles orchestration via Mesos:

- Write a distributed command scheduler using the Mesos framework SDK.
- Employ the Marathon framework and use its scheduler to execute the desired command on the cluster.
- Create a Chronos entry for the desired command.

Option 1: Writing a Mesos Framework Scheduler

As the developer of a Mesos framework scheduler, you implement two interfaces (your principal classes) and override their methods as required. Together, they coordinate all the scheduling and execution of tasks.

In the Scheduler interface, you must give meaningful implementations of at least the following methods:

- public void resourceOffers(…);

- public void statusUpdate(…);

- public void frameworkMessage(…);

- public void registered(…);

For the Executor interface, you should implement at least these three methods, and others as needed:

- public void launchTask(…);

- public void registered(…);

- public void frameworkMessage(…);
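The following minimal, self-contained Java sketch of a command-sampler framework shows how these callbacks divide the work. It deliberately avoids the real `org.apache.mesos` classes. `Offer`, `TaskInfo`, `TaskStatus`, `SimpleScheduler`, and `SimpleExecutor` are hypothetical stand-ins. The driver arguments that the real `resourceOffers`, `statusUpdate`, and `launchTask` callbacks receive are omitted. The task size (0.5 CPUs, 128 MB) and the `hostname` command are arbitrary assumptions.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical stand-ins for Mesos protobuf types; NOT the org.apache.mesos API.
record Offer(String offerId, String host, double cpus, double memMb) {}
record TaskInfo(String taskId, String host, String command, double cpus, double memMb) {}
record TaskStatus(String taskId, String state) {}

// Shaped like the Scheduler callbacks listed above, minus the driver arguments.
interface SimpleScheduler {
    List<TaskInfo> resourceOffers(List<Offer> offers); // returns the tasks to launch
    void statusUpdate(TaskStatus status);
}

// Shaped like Executor.launchTask: run a task on this node and report its status.
interface SimpleExecutor {
    TaskStatus launchTask(TaskInfo task);
}

// Launches `command` on accepted offers until `wanted` tasks have been started.
final class CommandSamplerScheduler implements SimpleScheduler {
    private static final double TASK_CPUS = 0.5, TASK_MEM_MB = 128; // assumed task size
    private final String command;
    private final int wanted;
    private int launched = 0;
    final List<TaskStatus> updates = new ArrayList<>();

    CommandSamplerScheduler(String command, int wanted) {
        this.command = command;
        this.wanted = wanted;
    }

    @Override
    public List<TaskInfo> resourceOffers(List<Offer> offers) {
        List<TaskInfo> tasks = new ArrayList<>();
        for (Offer offer : offers) {
            // Use an offer only if one task fits and more tasks are still wanted;
            // a real scheduler would explicitly decline the offers it skips.
            if (launched < wanted && offer.cpus() >= TASK_CPUS && offer.memMb() >= TASK_MEM_MB) {
                tasks.add(new TaskInfo("task-" + launched++, offer.host(), command, TASK_CPUS, TASK_MEM_MB));
            }
        }
        return tasks;
    }

    @Override
    public void statusUpdate(TaskStatus status) {
        updates.add(status); // a real framework might relaunch on TASK_FAILED
    }
}

// Executor stub: does not actually run the command, just reports success.
final class StubExecutor implements SimpleExecutor {
    @Override
    public TaskStatus launchTask(TaskInfo task) {
        return new TaskStatus(task.taskId(), "TASK_FINISHED");
    }
}

final class SchedulerDemo {
    public static void main(String[] args) {
        CommandSamplerScheduler scheduler = new CommandSamplerScheduler("hostname", 2);
        SimpleExecutor executor = new StubExecutor();
        List<Offer> offers = List.of(
                new Offer("o1", "node-1", 1.0, 512),
                new Offer("o2", "node-2", 0.25, 512), // too few CPUs for one task
                new Offer("o3", "node-3", 2.0, 1024));
        for (TaskInfo task : scheduler.resourceOffers(offers)) {
            // In Mesos, the launch and the status update would travel via the master.
            scheduler.statusUpdate(executor.launchTask(task));
        }
        System.out.println(scheduler.updates);
    }
}
```

Running `SchedulerDemo.main` prints two `TASK_FINISHED` statuses. The offers from `node-1` and `node-3` each receive a task, while the offer from `node-2` has too few CPUs.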

Two-way Interaction between the Scheduler and Executor

Built on the principles of a UNIX kernel, Mesos abstracts all the compute resources of the cluster: CPUs, memory, disk, and ports. It orchestrates two-level scheduling through messages, events, resource offers, and status updates.

This two-level scheduling provides a layer of abstraction, or indirection, between the framework scheduler, Mesos, and the slave nodes. There are two reasons for this approach:

- The indirection provides common functionality that every new distributed system would otherwise re-implement, such as failure detection, task distribution, and task life-cycle management. Because the API exposes this functionality, developers don’t have to build it themselves.
- The two-level abstraction lets tasks from multiple distributed systems run on the same cluster of nodes and share its resources dynamically. This enables multi-tenancy and better resource utilization.
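The two levels are easier to see in a toy model. In the hypothetical Java sketch below, the master (level 1) decides which framework sees each agent's free CPUs first, rotating round-robin. Each framework's own scheduler (level 2) then decides how much of an offer to take, and whatever it leaves is offered to the next framework. This is a deliberate simplification. Mesos's built-in allocator uses Dominant Resource Fairness rather than plain round-robin, and real offers cover more than CPUs. `Offer`, `Framework`, `FixedDemand`, and `TwoLevelMaster` are illustrative names, not Mesos API.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Illustrative types only; not the org.apache.mesos API.
record Offer(String host, double cpus) {}

interface Framework {
    String name();
    // Level 2: the framework's own scheduler decides how much of an offer to use (0 = decline).
    double accept(Offer offer);
}

// A framework that wants a fixed number of CPUs from each offer it sees.
record FixedDemand(String name, double perOffer) implements Framework {
    public double accept(Offer offer) {
        return Math.min(perOffer, offer.cpus());
    }
}

final class TwoLevelMaster {
    // Level 1: the master decides which framework sees each agent's free CPUs first,
    // rotating round-robin; whatever a framework leaves is offered to the next one.
    static Map<String, Double> allocate(List<Offer> agents, List<Framework> frameworks) {
        Map<String, Double> used = new LinkedHashMap<>();
        for (Framework f : frameworks) used.put(f.name(), 0.0);
        int first = 0;
        for (Offer agent : agents) {
            double free = agent.cpus();
            for (int i = 0; i < frameworks.size() && free > 0; i++) {
                Framework f = frameworks.get((first + i) % frameworks.size());
                double taken = Math.min(free, f.accept(new Offer(agent.host(), free)));
                used.merge(f.name(), taken, Double::sum);
                free -= taken;
            }
            first = (first + 1) % frameworks.size();
        }
        return used;
    }

    public static void main(String[] args) {
        List<Offer> agents = List.of(new Offer("node-1", 4), new Offer("node-2", 4));
        List<Framework> frameworks = List.of(
                new FixedDemand("command-sampler", 1), new FixedDemand("crawler", 2));
        System.out.println(allocate(agents, frameworks)); // {command-sampler=2.0, crawler=4.0}
    }
}
```

Both frameworks end up sharing the same two nodes, which is the multi-tenancy the paragraph above describes.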

Using protocol buffers, the slave nodes communicate with the Mesos master, which passes messages up to the framework scheduler. Likewise, the framework scheduler sends launch requests for the offers it accepts to the Mesos master. The master delegates them to the slave nodes, where the actual tasks are performed.
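The round trip just described can be traced with an in-process Java model. This is only a sketch. Real Mesos exchanges protocol-buffer messages over the network, and the `Framework`, `Master`, and `Agent` classes and their method names here are hypothetical. The log records each hop in order:

- Resources are reported up to the master.
- The master passes an offer to the framework.
- The framework's launch request is passed down to the agent.
- Status updates are relayed back up to the framework.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical in-process model of the message path. Real Mesos exchanges
// protocol-buffer messages over the network; these names are illustrative only.
final class MessageFlow {

    static final class Framework {
        final List<String> log;
        Master master;
        Framework(List<String> log) { this.log = log; }

        void resourceOffer(String offerId, String host) {
            log.add("framework: accept " + offerId);
            master.launchTask(host, "task-" + host); // the launch request goes back via the master
        }

        void statusUpdate(String taskId, String state) {
            log.add("framework: " + taskId + " " + state);
        }
    }

    static final class Agent {
        final List<String> log;
        final String host;
        Master master;
        Agent(List<String> log, String host) { this.log = log; this.host = host; }

        void advertise() {
            log.add(host + ": report free resources");
            master.offer(this);
        }

        void runTask(String taskId) {
            log.add(host + ": run " + taskId);
            master.statusUpdate(taskId, "TASK_RUNNING");
            master.statusUpdate(taskId, "TASK_FINISHED"); // pretend the command completed
        }
    }

    static final class Master {
        final List<String> log;
        final Framework framework;
        final List<Agent> agents = new ArrayList<>();
        Master(List<String> log, Framework framework) { this.log = log; this.framework = framework; }

        // Up: an agent's free resources become an offer to the framework scheduler.
        void offer(Agent agent) {
            log.add("master: offer-" + agent.host + " to framework");
            framework.resourceOffer("offer-" + agent.host, agent.host);
        }

        // Down: forward the launch request to the agent that made the offer.
        void launchTask(String host, String taskId) {
            log.add("master: launch " + taskId + " on " + host);
            for (Agent a : agents) {
                if (a.host.equals(host)) a.runTask(taskId);
            }
        }

        // Up again: relay status updates to the framework scheduler.
        void statusUpdate(String taskId, String state) {
            log.add("master: relay " + state);
            framework.statusUpdate(taskId, state);
        }
    }

    static List<String> run(String... hosts) {
        List<String> log = new ArrayList<>();
        Framework framework = new Framework(log);
        Master master = new Master(log, framework);
        framework.master = master;
        for (String h : hosts) {
            Agent agent = new Agent(log, h);
            agent.master = master;
            master.agents.add(agent);
        }
        for (Agent agent : master.agents) agent.advertise();
        return log;
    }

    public static void main(String[] args) {
        run("node-1").forEach(System.out::println);
    }
}
```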

Command Sampler and DNS Crawler

I have implemented these classes, and you can examine them on my GitHub. The README explains the steps to spin up Mesos master and slave nodes with Mesos, Marathon, and Chronos installed and configured. You will need VirtualBox, though.

I also have another example of a framework scheduler with two executors, called mesos-dns-crawler.

(But first, I urge you to watch the aforementioned YouTube presentations by Ben and David: they provide the conceptual context.)

The diagram below gives a high-level view of how a framework scheduler’s implemented classes interact:

- Available resources are offered from the slave nodes to the framework via the Mesos master.
- Accepted offers are sent to the slave nodes via the Mesos master as task launch requests.
- Status updates and messages percolate back up to the framework scheduler.

Option 2: Deploying a Distributed Application in Marathon

Marathon is a Mesos framework, analogous to a cluster-wide init.d for any application or service deployed through it. Because it is implemented as a scheduler, it orchestrates all of its own tasks. There are two ways to deploy an application or service on Marathon: one is its REST API, and the other is its easy-to-use GUI.
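The following hypothetical Java sketch illustrates the init.d analogy through the supervision behavior such a framework provides. It keeps a desired number of instances running, relaunches any that die, and stops the surplus when scaled down. It is not Marathon's implementation, and `AppSupervisor` and its methods are illustrative names only.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical sketch, not Marathon's code: keep `desired` instances of an app alive.
final class AppSupervisor {
    private int desired;
    private int nextId = 0;
    private final List<String> running = new ArrayList<>();

    AppSupervisor(int desired) { this.desired = desired; }

    // Like changing the app's instance count; takes effect on the next reconcile().
    void scaleTo(int instances) { desired = instances; }

    // Called when an instance's task fails or its node disappears.
    void instanceDied(String id) { running.remove(id); }

    // One reconciliation pass: launch replacements for missing instances, stop surplus ones.
    List<String> reconcile() {
        while (running.size() < desired) running.add("instance-" + nextId++);
        while (running.size() > desired) running.remove(running.size() - 1);
        return List.copyOf(running);
    }

    public static void main(String[] args) {
        AppSupervisor app = new AppSupervisor(3);
        System.out.println(app.reconcile()); // [instance-0, instance-1, instance-2]
        app.instanceDied("instance-1");
        System.out.println(app.reconcile()); // [instance-0, instance-2, instance-3]
        app.scaleTo(2);
        System.out.println(app.reconcile()); // [instance-0, instance-2]
    }
}
```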

To deploy our command sampler, we first need a JSON description of it:

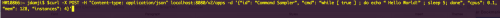

Next, with Marathon running on your cluster, you can use its REST endpoint to deploy the app. At any point, you can scale the number of instances up or down. For example,

will deploy four instances of “Command Sampler” on nodes in the cluster.
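The following hedged Java sketch shows the two REST calls involved, deploying and then scaling the app. The requests are built with the JDK's `java.net.http.HttpRequest` but not sent. The sketch makes these assumptions:

- Marathon's `POST /v2/apps` endpoint creates an app.
- `PUT /v2/apps/{appId}` with an `instances` field scales it.
- The host `marathon.example:8080`, the app ID `command-sampler`, the `hostname` command, and the resource values are all placeholders.

Check the REST API reference for your Marathon version before relying on any of these.

```java
import java.net.URI;
import java.net.http.HttpRequest;

// Sketch of Marathon REST requests; they are built here but not sent.
final class MarathonRequests {
    // Placeholder address (assumption): point this at your Marathon instance.
    static final String MARATHON = "http://marathon.example:8080";

    // Minimal app definition; cmd is not JSON-escaped, so keep it free of quotes.
    static String appJson(String appId, String cmd, int instances) {
        return "{\"id\": \"" + appId + "\", \"cmd\": \"" + cmd
                + "\", \"cpus\": 0.1, \"mem\": 32, \"instances\": " + instances + "}";
    }

    // Deploy: POST /v2/apps with the app definition.
    static HttpRequest deploy(String appId, String cmd, int instances) {
        return HttpRequest.newBuilder(URI.create(MARATHON + "/v2/apps"))
                .header("Content-Type", "application/json")
                .POST(HttpRequest.BodyPublishers.ofString(appJson(appId, cmd, instances)))
                .build();
    }

    // Scale: PUT /v2/apps/{appId} carrying only the new instance count.
    static HttpRequest scale(String appId, int instances) {
        return HttpRequest.newBuilder(URI.create(MARATHON + "/v2/apps/" + appId))
                .header("Content-Type", "application/json")
                .PUT(HttpRequest.BodyPublishers.ofString("{\"instances\": " + instances + "}"))
                .build();
    }

    public static void main(String[] args) {
        HttpRequest scaleUp = scale("command-sampler", 4);
        System.out.println(scaleUp.method() + " " + scaleUp.uri());
        // To send it: HttpClient.newHttpClient().send(scaleUp, HttpResponse.BodyHandlers.ofString())
    }
}
```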

Similarly, you can deploy the task using the Marathon GUI. The screenshots show a) configuring the service and b) scaling the deployed instances.

Option 3: Delegating to Chronos

Finally, you can accomplish the same task with Chronos. Using its simple GUI, you can configure a command or an executable to run as a task on the cluster at periodic intervals.
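If you prefer scripting to the GUI, you can also describe a Chronos job in JSON. The hypothetical Java sketch below builds such a description under the following assumptions:

- Chronos uses an ISO 8601 repeating-interval schedule (`R/<start>/<period>`, where a bare `R` repeats forever).
- A job definition has the fields `name`, `command`, `schedule`, `epsilon`, and `owner`.
- The JSON is POSTed to the `/scheduler/iso8601` endpoint.

Treat the field names, the endpoint, and all the values as assumptions to verify against the Chronos documentation for your version.

```java
import java.time.Duration;
import java.time.Instant;

// Sketch of a Chronos job definition; field names and endpoint are assumptions to verify.
final class ChronosJob {
    // ISO 8601 repeating interval R/<start>/<period>; a bare "R" means repeat forever.
    static String schedule(Instant start, Duration period) {
        return "R/" + start + "/" + period; // Instant and Duration print in ISO 8601 form
    }

    // Hypothetical job JSON (values are not JSON-escaped); to be POSTed to /scheduler/iso8601.
    // "epsilon" (assumed): how late a missed run may still be started.
    static String jobJson(String name, String command, String schedule, String owner) {
        return "{\"name\": \"" + name + "\", \"command\": \"" + command
                + "\", \"schedule\": \"" + schedule
                + "\", \"epsilon\": \"PT30M\", \"owner\": \"" + owner + "\"}";
    }

    public static void main(String[] args) {
        String every = schedule(Instant.parse("2015-06-01T00:00:00Z"), Duration.ofHours(1));
        System.out.println(every); // R/2015-06-01T00:00:00Z/PT1H
        System.out.println(jobJson("command-sampler", "hostname", every, "ops@example.com"));
    }
}
```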

Conclusion

So far, I’ve shared three distinct ways to deploy a distributed application on Apache Mesos. All three use the resources of the cluster, whether through an explicit framework scheduler, Marathon’s cluster-wide scheduler, or Chronos’ scheduler.

These simple yet illustrative examples show how easily you can deploy a distributed application on the cluster, and in more ways than one. That holds whether the application is a simple command, a complex crawler, or a data-processing engine with multiple executor tasks. You can “feel” its potential and power.

Mesos manages the compute resources of thousands of nodes, in a data center or in the cloud, as a single logical computer. It puts programmability and flexibility at the disposal of distributed application developers and DevOps engineers. Such simplicity at scale, and such ease of use, may approach the kind of innovation that takes us from Zero to One!

Resources

In this post, I did not explore failover, resiliency, or high availability when executors, frameworks, or the Mesos master fail. Nor did I comment on optimizing or reserving cluster resources, but the links below expound on many of these aspects. I encourage you to peruse them.